While everyone was talking about NVIDIA and AMD GPUs, you may have missed this one.

NVIDIA's next-generation AI chips physically cannot exist without high-bandwidth memory.

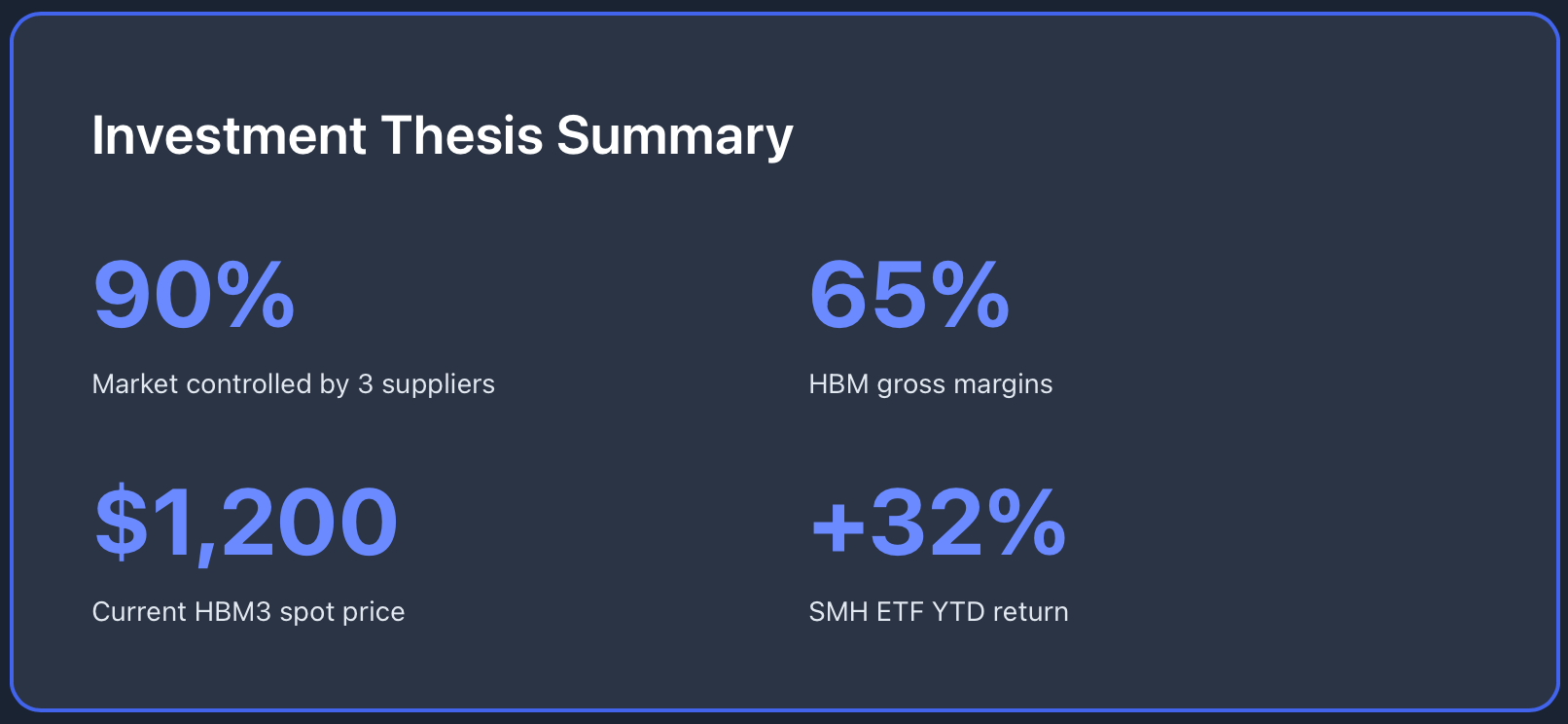

And the three companies that control 90% of global HBM production, Samsung, SK Hynix, and Micron, have sold out their entire 2026 capacity.

Server DRAM prices are climbing 60-70% in Q1 alone. The semiconductor ETF SMH is up 32% YTD, with NVIDIA representing 19% of its weight.

This is supply chain math.

The Numbers Behind the Shortage

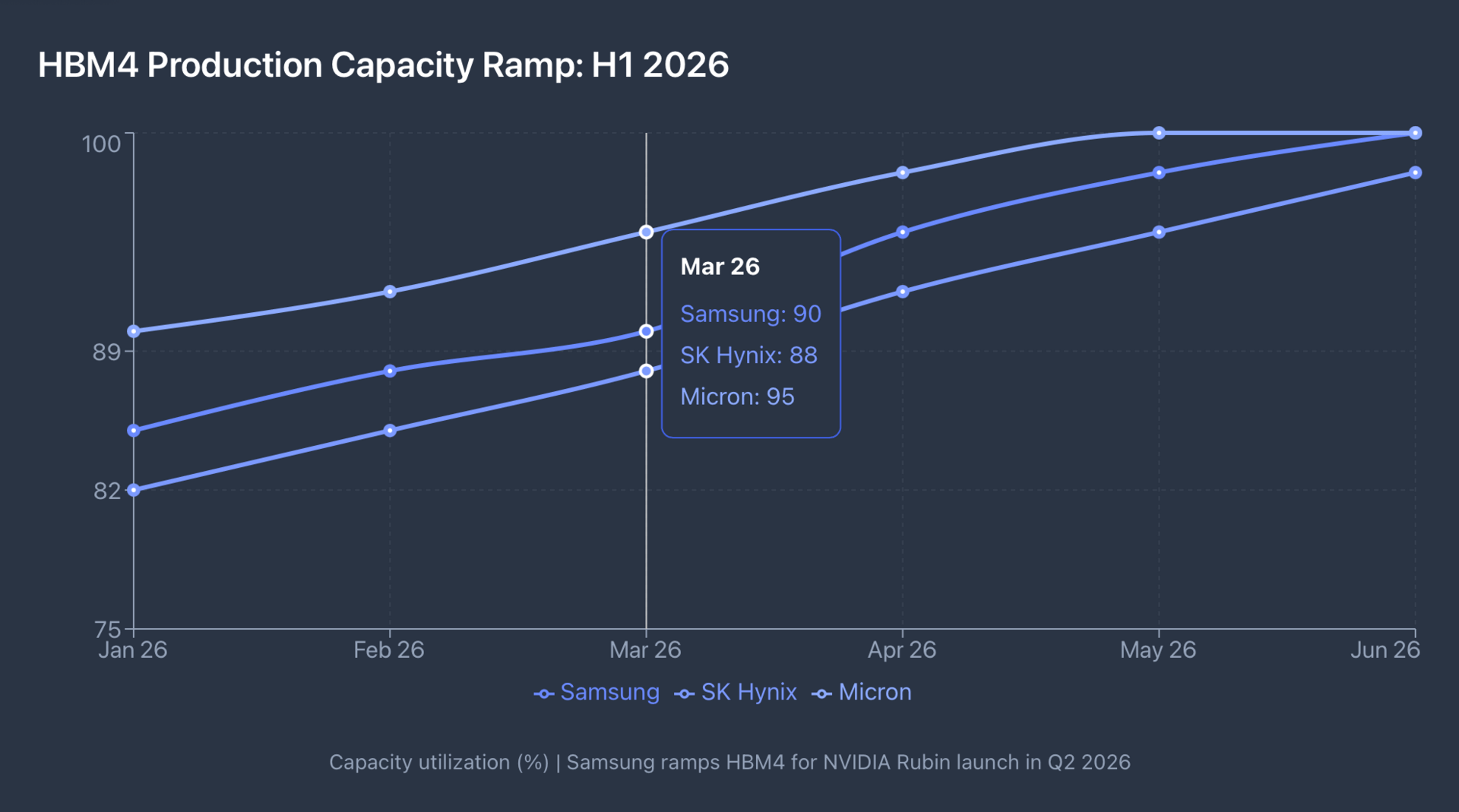

Micron confirmed in late 2025 that its HBM capacity is completely sold out through 2026. Samsung and SK Hynix followed with similar statements. The timing matters because NVIDIA's upcoming Vera Rubin platform, which pairs Rubin GPUs requiring HBM4 memory with an 88-core Vera CPU, launches in Q2 2026. Samsung secured the exclusive contract for Rubin's HBM4 supply.

But here's the constraint: HBM4 production requires 16-Hi stacking technology, which only three manufacturers can produce at scale. Samsung, SK Hynix, and Micron are racing to meet demand that exceeds their combined output by significant margins.

TrendForce reports that server DRAM prices will increase 60-70% in Q1 2026 as hyperscalers like Google and Microsoft compete for limited inventory. This is not a temporary spike. The shortage persists because building new HBM fabrication capacity takes 18-24 months and requires capital expenditures exceeding $10 billion per facility.
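To put the 60-70% figure in perspective, here is a minimal Python sketch of what such an increase does to a fixed procurement budget. It uses only the range quoted above. The function name `capacity_retained` is illustrative, and the sketch assumes the budget stays flat and the full increase is passed through to the buyer.

```python
def capacity_retained(price_increase: float) -> float:
    """Fraction of the original memory volume a fixed budget still buys
    after prices rise by `price_increase` (0.60 means +60%)."""
    if price_increase <= -1.0:
        raise ValueError("price_increase must be greater than -100%")
    return 1.0 / (1.0 + price_increase)


# Range quoted in the article: server DRAM up 60-70% in Q1 2026.
for increase in (0.60, 0.70):
    retained = capacity_retained(increase)
    print(f"+{increase:.0%} price -> budget buys {retained:.1%} of prior volume")
```

Under those assumptions, a flat budget buys roughly 59-63% of the volume it bought before the increase.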

The downstream effects are already visible. NVIDIA reduced gaming GPU production by approximately 40% to prioritize AI chip manufacturing. PC and smartphone manufacturers are facing higher RAM costs as memory suppliers redirect production capacity toward higher-margin HBM products.

Is your company experiencing memory procurement challenges in 2026?

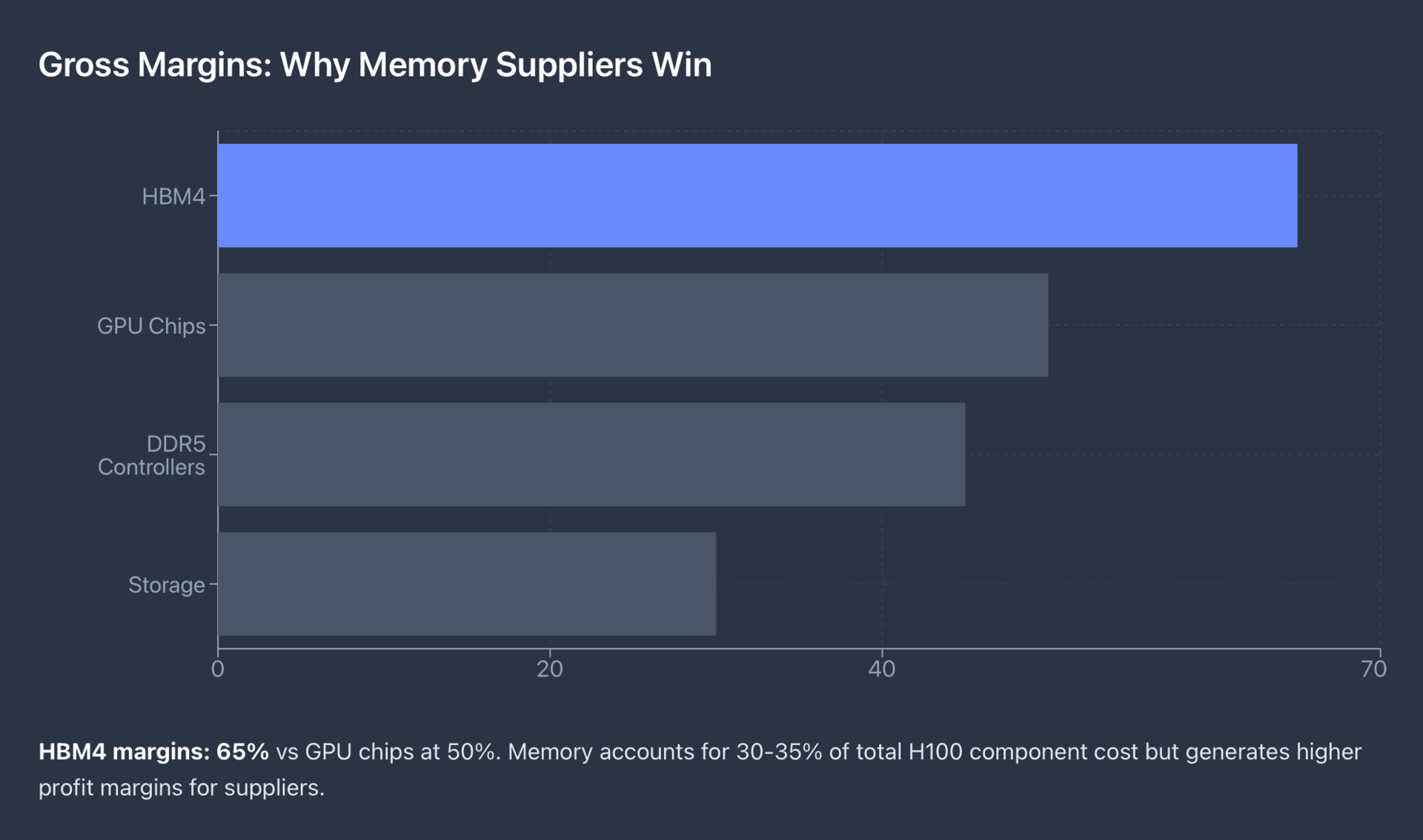

HBM operates at 65% gross margins compared to GPU chips at 50%. That gap matters more than you might think.

A single NVIDIA H100 GPU contains approximately 80GB of HBM3 memory. The memory alone accounts for roughly 30-35% of the total component cost but generates disproportionate profit margins for suppliers.

As AI models scale, the memory bottleneck becomes the critical constraint: GPT-5 will require substantially more memory bandwidth than GPT-4.

Samsung's new AI memory megafactory in South Korea, announced in October 2025, represents a $230 billion investment through 2027. The facility focuses exclusively on HBM production. SK Hynix is investing similar amounts. These capital commitments signal that memory suppliers see sustained demand extending well beyond 2026.

The technology itself creates natural barriers to entry. 16-Hi HBM4 stacking involves bonding 16 layers of DRAM with through-silicon vias, maintaining thermal stability, and achieving data transfer rates exceeding 1.5TB/s. Only three companies have demonstrated production-ready capabilities at this specification level.

The Memory Stack: More Than Just HBM

Understanding the full memory hierarchy reveals additional investment opportunities:

High-Bandwidth Memory (HBM): Samsung, SK Hynix, and Micron manufacture the HBM that sits directly on NVIDIA's GPU packages. This is where AI models "think": loading weights, computing attention mechanisms, and generating outputs. Current spot market prices for HBM3 exceed $1,200 per unit, up from $750 in early 2025.

Storage-Class Memory: Western Digital and SanDisk supply the petabyte-scale storage systems that house AI training datasets. As models grow larger, the data pipeline from storage to memory to compute becomes increasingly critical. Training GPT-5-class models requires reading trillions of tokens from persistent storage into active memory.

Memory Controllers: Silicon Motion and Rambus design the controller chips that manage data flow between storage, DRAM, and processing units. These companies operate with lower profiles but capture essential margin in the DDR5 transition. DDR5 controllers command 40-50% higher average selling prices than DDR4 equivalents.

Each layer of this stack faces supply constraints in 2026.
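The HBM3 spot price move quoted above can be turned into a percentage with a short Python sketch. The two prices are the article's own figures. The helper name `pct_change` is illustrative, and because the text says prices "exceed" $1,200, the result is best read as a lower bound.

```python
def pct_change(old: float, new: float) -> float:
    """Relative change from `old` to `new`, expressed as a fraction."""
    if old == 0:
        raise ValueError("old price must be non-zero")
    return (new - old) / old


hbm3_early_2025 = 750.0  # USD per unit, figure quoted in the article
hbm3_current = 1200.0    # USD per unit, figure quoted in the article

change = pct_change(hbm3_early_2025, hbm3_current)
print(f"HBM3 spot price change: {change:.0%}")
```

That is an increase of at least 60% on the article's numbers, which lines up with the lower end of the server DRAM range cited earlier.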

ETF Exposure: How to Access the Trend

Three semiconductor ETFs provide differentiated exposure to the memory shortage:

VanEck Semiconductor ETF $SMH carries a 19% allocation to NVIDIA and substantial positions in TSMC, ASML, and Broadcom. The fund returned 32% YTD through January 2026. However, its high concentration in NVIDIA (the largest holding) introduces volatility that correlates directly with AI chip demand cycles. Beta to the broader market exceeds 1.4.

iShares Semiconductor ETF $SOXX maintains a more balanced 12% NVIDIA position with broader exposure to memory manufacturers and equipment suppliers. The fund returned 28% year-to-date with slightly lower volatility than SMH. SOXX includes meaningful positions in Applied Materials and Lam Research—companies that sell the equipment required to build HBM production capacity.

SPDR S&P Semiconductor ETF $XSD takes an equal-weight approach with an 8% NVIDIA allocation. This fund captures smaller memory and storage specialists including Western Digital and smaller controller manufacturers. Returns of 22% year-to-date reflect lower concentration risk but also reduced exposure to the highest-growth segments. XSD functions as a more speculative play on secondary beneficiaries.

The key difference: SMH offers maximum leverage to the NVIDIA-HBM supply chain, while SOXX provides equipment and infrastructure exposure, and XSD captures broader semiconductor trends with lower concentration risk.

What Happens Next

Two catalysts will define the memory market through mid-2026:

Q1 2026: Samsung ramps HBM4 production for NVIDIA's Rubin architecture. Initial volumes will be constrained as Samsung qualifies its 16-Hi stacking process. Any delays in this ramp directly impact NVIDIA's ability to ship next-generation AI accelerators. Investors should monitor Samsung's quarterly earnings for HBM yield rates and capacity utilization metrics.

Q2 2026: NVIDIA launches the Vera Rubin platform, pairing the 88-core Arm-based Vera CPU with Rubin GPUs and exclusive Samsung HBM4 integration. Hyperscaler customers including Microsoft, Google, and Meta have reportedly pre-ordered thousands of systems. But Rubin's success depends entirely on Samsung's ability to deliver HBM4 at volume.

Real talk: Is Jensen Huang losing sleep over Samsung's HBM4 production schedule?

The memory shortage creates a straightforward investment thesis: companies controlling HBM supply will capture outsized margins as demand exceeds supply. The mathematics of semiconductor fabrication timelines mean this shortage cannot resolve quickly even with aggressive capital investment.

Practical Approach for Investors

Set up a Google Alert for "HBM shortage" to track supply developments in real time. Memory supply chain disruptions often surface in trade publications weeks before they reach mainstream financial media.

Consider a 20% allocation to SMH for direct NVIDIA-memory exposure, balanced with a 10% position in SOXX for equipment and infrastructure plays. This provides leverage to the memory supercycle while reducing single-stock concentration risk.
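A quick look-through calculation shows how much indirect NVIDIA exposure that allocation carries. This is a rough Python sketch using only the ETF weights quoted in this article. The function name `look_through_exposure` is illustrative, and real fund weights drift daily, so treat the result as an approximation.

```python
# ETF weights in the portfolio (the allocation suggested above).
portfolio = {"SMH": 0.20, "SOXX": 0.10}

# NVIDIA weight inside each ETF, as quoted in the article.
nvda_weight = {"SMH": 0.19, "SOXX": 0.12, "XSD": 0.08}


def look_through_exposure(portfolio: dict[str, float],
                          holding_weight: dict[str, float]) -> float:
    """Portfolio-level weight of one stock held indirectly through ETFs."""
    return sum(alloc * holding_weight[etf] for etf, alloc in portfolio.items())


exposure = look_through_exposure(portfolio, nvda_weight)
print(f"Indirect NVIDIA exposure: {exposure:.1%} of the portfolio")
```

On these figures, the 30% combined ETF position translates to about 5% of the total portfolio in NVIDIA, most of it coming through SMH.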

The 2026 AI memory shortage is not a temporary phenomenon. It represents a structural shift where memory suppliers capture increasing value in the AI compute stack.

Samsung, SK Hynix, and Micron are not just NVIDIA suppliers; they are critical bottlenecks in the entire AI infrastructure buildout.

And bottlenecks generate pricing power.


Disclaimer: This is not financial or investment advice. Do your own research and consult a qualified financial advisor before investing.